As AI reshapes industries and our lives, the burning question is how to benefit from AI's potential without jeopardizing our privacy.

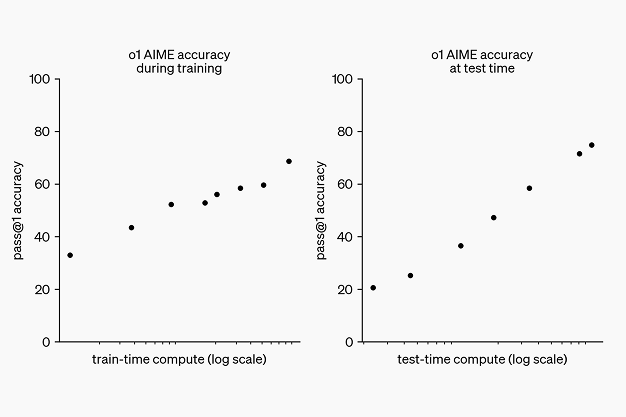

One emerging star in AI is DeepSeek, which has drawn attention for what it calls "inference-time computing." This approach lets its models focus on only the most relevant aspects of a task, improving efficiency and cost-effectiveness.

However, its Chinese origins and open-source framework raise data security and privacy concerns. As DeepSeek gains momentum, the debate over its role in advancing AI, and the privacy risks that come with it, has become more pertinent than ever.

DeepSeek

DeepSeek is a rapidly growing Chinese technology company redefining the landscape of artificial intelligence with its unique approach to developing affordable, open-source large language models (LLMs). DeepSeek aims to challenge traditional U.S. tech giants by focusing on cost efficiency and offering robust and accessible AI solutions to a broader market. One of its standout innovations is the DeepSeek model, which has garnered praise for its impressive information processing and response generation capabilities.

What truly distinguishes DeepSeek is its remarkable cost efficiency. The company asserts that it trained its model for a mere $6 million, using 2,000 Nvidia H800 graphics processing units (GPUs). In stark contrast, the development of GPT-4 reportedly required an investment of $80 million to $100 million and 16,000 H100 GPUs. Taken at face value, that is more than a tenfold reduction in training cost, which lowers the barrier to entry for businesses and broadens access to advanced AI tools.

DeepSeek's commitment to open-source principles allows developers and organizations to use its technology without the prohibitive costs typically associated with proprietary systems. This fosters a collaborative environment for innovation and enables a diverse range of applications. The swift adoption of DeepSeek's technology highlights its potential impact: within days of its launch, it rose to the top of the free app charts in U.S. app stores, spawned over 700 open-source derivatives, and was integrated into platforms from Microsoft, AWS, and Nvidia.

Additionally, DeepSeek's models employ "inference-time computing," which activates only the necessary components for each specific task, further enhancing performance while minimizing operational costs.

How the DeepSeek Model Works

Data Collection and Preprocessing

The foundation of a successful AI model is the quality and diversity of the data it trains on. The DeepSeek model is no exception: it is trained on extensive datasets drawn from many kinds of text sources, including books, articles, and websites. This diversity enables the model to understand various contexts, styles, and nuances of human language.

Before training begins, the data undergoes a meticulous preprocessing phase. This step is essential for removing noise, that is, irrelevant or erroneous information that could hinder the model's learning process. Tokenization, normalization, and filtering help ensure the data is clean and well-structured. Because only high-quality, relevant content is kept, the model learns from a rich variety of language, which improves its ability to generate coherent and contextually appropriate responses and to adapt to everything from casual conversation to technical writing.
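To make the three preprocessing steps concrete, here is a minimal sketch in Python. It is not DeepSeek's pipeline: the function names, the 80% letter threshold, and the regex tokenizer are all illustrative assumptions, and production systems typically use subword tokenizers and far more elaborate filters.

```python
import re
import unicodedata


def normalize(text: str) -> str:
    """Apply Unicode normalization, lowercase, and collapse whitespace."""
    text = unicodedata.normalize("NFKC", text)
    text = text.lower()
    return re.sub(r"\s+", " ", text).strip()


def is_clean(text: str, min_words: int = 3) -> bool:
    """Hypothetical noise filter: drop very short or mostly non-alphabetic text."""
    if len(text.split()) < min_words:
        return False
    letters = sum(ch.isalpha() or ch.isspace() for ch in text)
    return letters / len(text) >= 0.8  # assumed threshold, for illustration


def tokenize(text: str) -> list[str]:
    """Toy tokenizer: split into words and single punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text)


def preprocess(docs: list[str]) -> list[list[str]]:
    """Normalize every document, filter out noise, then tokenize the rest."""
    cleaned = (normalize(d) for d in docs)
    return [tokenize(d) for d in cleaned if is_clean(d)]


raw_docs = [
    "  The  Quick brown fox\njumps over the lazy dog. ",
    "$$$ 1234 #### ????",  # mostly symbols: filtered out
    "ok",                  # too short: filtered out
]
tokens = preprocess(raw_docs)
print(tokens)
```

Only the first document survives the filter; the other two are discarded as noise before tokenization.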

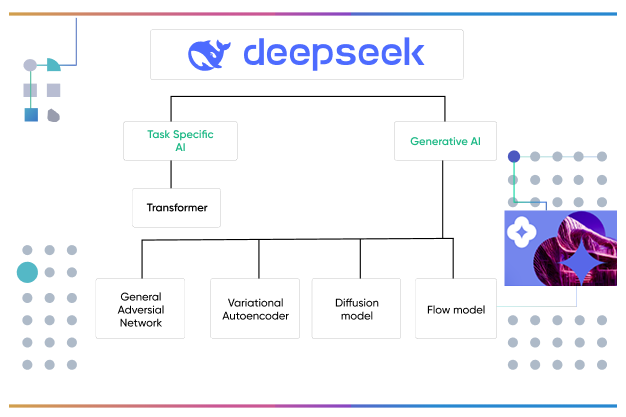

Model Architecture

At the heart of the DeepSeek model lies a sophisticated transformer architecture, a design that has revolutionized the field of natural language processing. Transformers are particularly adept at handling sequential data, making them ideal for tasks that involve understanding and generating human language. The architecture consists of multiple layers, each equipped with attention mechanisms that allow the model to focus on different input data segments.

This attention mechanism is a real game-changer: it lets the model weigh the importance of words and phrases relative to each other, capturing the long-range dependencies that are often crucial for context. In a sentence where the meaning of a word depends on a reference made several words earlier, the attention mechanism helps the model link the two correctly. This capability improves the model's understanding and its ability to generate contextually relevant and coherent responses. The transformer architecture therefore forms the core of the DeepSeek model, equipping it to perform well across a variety of language tasks.
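The weighting described above is usually implemented as scaled dot-product attention. The sketch below is a plain-Python illustration of that standard formulation, not DeepSeek's code; the three two-dimensional "token" vectors are made up, and real models use learned projections, multiple heads, and tensor libraries.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Output is the weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical embeddings: tokens 0 and 2 point in similar directions.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.1]]
outputs, weights = attention(x, x, x)  # self-attention: Q = K = V = x
print([round(w, 3) for w in weights[0]])
```

For token 0, the weights on tokens 0 and 2 are larger than the weight on token 1, which is how the model "links" related positions regardless of how far apart they sit in the sequence.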

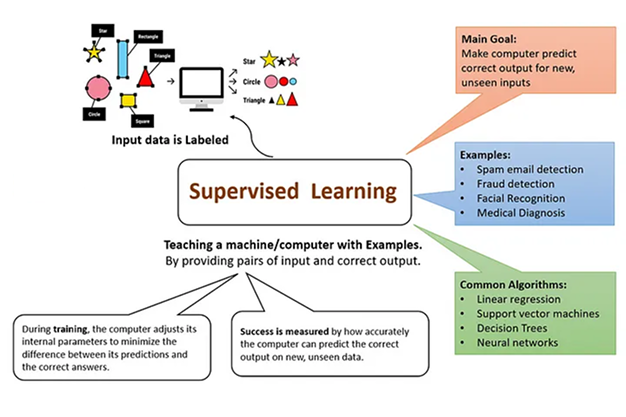

Training Process

The training of the DeepSeek model is a gargantuan exercise that taps into the power of 2,000 Nvidia H800 GPUs. These graphics processing units are designed explicitly for deep learning tasks and provide the computational muscle to process vast amounts of data efficiently. Their power comes from parallel processing, an attribute that allows this model to simultaneously learn from multiple data points, significantly accelerating the training timeline.

During training, the model undergoes a process called backpropagation, in which its internal parameters are adjusted based on the difference between the actual outcomes and the model's predictions. Repeating this step many times refines the model's grasp of language patterns and improves its accuracy. The combination of extensive data, advanced GPU technology, and sophisticated training algorithms enables the DeepSeek model to achieve remarkable performance levels. As a result, the model learns to generate text, understand subtleties, and capture the complexity of human language, making it a potent tool for many applications.
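The update rule behind this process can be shown on a deliberately tiny example. The sketch below trains a single linear unit with gradient descent, where backpropagation reduces to one application of the chain rule; the data, learning rate, and epoch count are illustrative assumptions and bear no relation to how a large language model is actually configured.

```python
def train(xs, ys, lr=0.05, epochs=1000):
    """Fit y = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Forward pass: compute predictions with the current parameters.
        preds = [w * x + b for x in xs]
        # Difference between predictions and actual outcomes.
        errors = [p - y for p, y in zip(preds, ys)]
        # Backward pass: gradients of the loss with respect to w and b.
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Update: move each parameter against its gradient.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]  # generated from y = 2x + 1
w, b = train(xs, ys)
print(round(w, 3), round(b, 3))
```

The learned parameters approach w = 2 and b = 1. A deep network repeats the same forward, backward, and update cycle, with the chain rule carrying the error signal back through every layer.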

Inference-Time Computing

One of the standout features of the DeepSeek model is its innovative approach to "inference-time computing." This technique is designed to optimize resource usage by activating only the relevant parts of the model for each specific query. Unlike traditional models that may run the entire architecture for every input, DeepSeek intelligently selects the necessary components to generate a response.

This targeted approach enhances the model's efficiency and significantly reduces operational costs. By minimizing the computational load, DeepSeek can deliver faster responses without sacrificing quality. This is particularly useful in real-time applications where speed is vital, such as chatbots or virtual assistants. Adjusting the model's operations dynamically for each input query lets it provide high-quality outputs while maintaining a lean operational footprint.
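The selective activation described above resembles sparse mixture-of-experts routing, in which a gate picks a few "expert" sub-networks per input and skips the rest. The following toy sketch assumes that interpretation; the experts, gate scores, and function names are hypothetical, and in a real model the gate scores come from a learned router rather than being passed in by hand.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(gate_scores, k=2):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    ranked = sorted(range(len(gate_scores)),
                    key=lambda i: gate_scores[i], reverse=True)
    top = ranked[:k]
    weights = softmax([gate_scores[i] for i in top])
    return list(zip(top, weights))


# Hypothetical experts: simple functions standing in for sub-networks.
experts = [
    lambda x: x + 1,
    lambda x: x * 2,
    lambda x: x ** 2,
    lambda x: -x,
]


def moe_forward(x, gate_scores, k=2):
    """Evaluate only the selected experts and blend their outputs."""
    routes = top_k_route(gate_scores, k)
    output = sum(w * experts[i](x) for i, w in routes)
    return output, [i for i, _ in routes]


output, used = moe_forward(3.0, gate_scores=[0.1, 2.0, 2.0, -1.0], k=2)
print(output, used)
```

Here only two of the four experts run for this input, so roughly half of the expert computation is skipped, which is the source of the efficiency gain the text describes.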

Response Generation

Once the DeepSeek model has processed the input, it generates a response. It does so by predicting the next token, one at a time, based on the patterns learned during training. The model draws on its knowledge of language structure, context, and semantics to produce appropriate replies.

The output tokens are then decoded into human-readable text, with the goal of a response that is accurate, engaging, and relevant to the user's query. This capability is essential for applications ranging from customer support to creative writing, where the quality of the generated text can significantly affect user experience. The model's ability to generate sophisticated, relevant responses reflects how well it was trained and makes it a valuable asset in artificial intelligence.
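Token-by-token generation can be illustrated with a greedy decoding loop. In the sketch below, a hand-written probability table stands in for the trained model; the table, the `<s>` and `</s>` markers, and the function name are assumptions for illustration. A real model conditions on the entire preceding context rather than just the last token, and often samples instead of always taking the most likely token.

```python
# Hypothetical next-token probabilities standing in for a trained model.
NEXT_TOKEN_PROBS = {
    "<s>": {"the": 0.6, "a": 0.4},
    "the": {"model": 0.7, "data": 0.3},
    "a": {"model": 0.5, "token": 0.5},
    "model": {"responds": 0.8, "</s>": 0.2},
    "data": {"</s>": 1.0},
    "token": {"</s>": 1.0},
    "responds": {"</s>": 1.0},
}


def greedy_decode(max_tokens=10):
    """Repeatedly pick the most probable next token until the end marker."""
    tokens = ["<s>"]
    for _ in range(max_tokens):
        probs = NEXT_TOKEN_PROBS[tokens[-1]]
        next_token = max(probs, key=probs.get)
        if next_token == "</s>":
            break
        tokens.append(next_token)
    # Decode: drop the start marker and join tokens into readable text.
    return " ".join(tokens[1:])


text = greedy_decode()
print(text)
```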

Continuous Learning

The DeepSeek model is designed with a continuous learning framework that allows it to adapt and improve over time. This feature is significant in the rapidly evolving landscape of language and communication, where new terms, phrases, and contexts emerge regularly. By incorporating feedback from user interactions, the model can refine its responses and enhance its understanding of language nuances and user preferences.

This continuous learning process is facilitated through mechanisms that allow the model to analyze the effectiveness of its responses. For instance, if users provide feedback indicating a response was unhelpful or inaccurate, the model can adjust its parameters accordingly. This iterative feedback loop helps the model correct mistakes and enables it to learn from successful interactions, gradually improving its performance.

Moreover, the ability to learn from real-world usage means that the DeepSeek model can stay relevant in a dynamic environment. As language evolves and new topics emerge, the model can adapt its knowledge base, ensuring it can effectively generate relevant and timely responses.

Privacy Concerns over DeepSeek

DeepSeek poses several significant privacy and safety risks, including weak protections against data leaks and a tendency to hallucinate, which could accelerate the spread of disinformation. Its data practices are also concerning: customer data is used for model training and stored in China, raising the risk that sensitive information is exposed to unauthorized access and regulatory scrutiny.

DeepSeek is also not fully transparent about how it collects, processes, and shares user data. According to its privacy policy, it collects chat history, device information, IP addresses, and other details, a breadth that raises red flags about potential misuse or privacy breaches. A particular concern is the possibility of government access to this data: China's stringent cybersecurity laws could give state requests precedence over the privacy of DeepSeek's users.

These practices resemble those of other Chinese tech companies, which have raised similar privacy concerns. The company has also experienced a recent data breach, in which over one million sensitive records were exposed due to misconfigured cloud storage. This breach underscores the vulnerabilities in DeepSeek's data management, which pose significant risks including identity theft and corporate espionage.

Conclusion

In conclusion, the DeepSeek model represents a significant step forward in artificial intelligence, showcasing the potential of innovative technologies to reshape our interactions with AI. While it offers exciting possibilities, it also highlights the critical need to address privacy concerns in order to build and maintain user trust. As DeepSeek continues to evolve, it points toward a future in which intelligent systems enhance our daily lives and do so with a strong commitment to ethical principles. For any digital transformation company, this marks a pivotal moment to rethink how AI can be responsibly integrated into products, processes, and experiences. The journey is far from over, and DeepSeek stands as a formidable force in the ongoing evolution of AI.
